In this article, I will share the latest Genshin Impact Version 5.6 redeem codes with you and show you how to use them easily. Genshin Impact is one of the most popular open-world RPGs, and players always look for free redeem codes to get Primogems, Mora, and other in-game rewards. HoYoverse regularly releases new…

Microsoft Azure Data Scientist Associate (DP-100) Quiz Answers + Review

In this post, I’m sharing a quick overview of the Microsoft Azure Data Scientist Associate (DP-100) Exam Prep Professional Certificate on Coursera, a 5-course program designed to help you build the practical skills needed to step confidently into a career in data science. If you’re currently working your way through this professional certificate (or thinking…

Prepare for DP-100: Data Science on Microsoft Azure Exam Answers + Review

In this post, I’m sharing a quick review of the Prepare for DP-100: Data Science on Microsoft Azure Exam course, along with important insights to help you finalize your readiness for the DP-100 certification exam. Just completed this fifth and final course in the Microsoft Azure Data Scientist Associate Professional Certificate? Then you’re right at…

More Software Engineering Jobs in Japan for Expats – April 2025 Edition

Are you dreaming of working in Japan as a software engineer? Whether you’re an experienced developer or just exploring opportunities abroad, Japan is actively hiring skilled software engineers — especially those who speak English. In this post, we highlight the latest Software Engineering Jobs in Japan for Foreigners, including positions that offer visa support and…

Perform data science with Azure Databricks Quiz Answers + Review

In this post, I’m sharing a quick review of the Perform Data Science with Azure Databricks course, along with useful insights to support your prep for the DP-100 certification exam. Just completed this fourth course in the Microsoft Azure Data Scientist Associate Professional Certificate? You’re now deep into the path toward certification, and this course…

Build and Operate Machine Learning Solutions with Azure Quiz Answers + Review

In this post, I’m sharing a quick review of the Build and Operate Machine Learning Solutions with Azure course, along with helpful insights for anyone getting ready to take the DP-100 certification exam. Just wrapped up this third course in the Microsoft Azure Data Scientist Associate Professional Certificate? You’re getting closer to the DP-100 exam,…

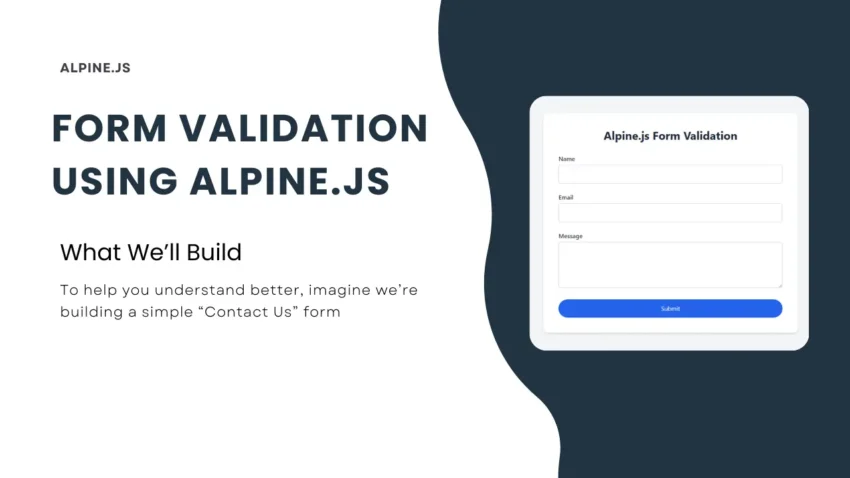

Form Validation using Alpine.js

In this post, I will show you how to add form validation using Alpine.js. It’s very easy to do, and I think you’ll really enjoy how simple it is! Alpine.js is a lightweight JavaScript framework designed for minimal effort and maximum interactivity. In this article, we’ll walk through building a simple client-side form validation system…

Microsoft Azure Machine Learning for Data Scientists Quiz Answers + Review

In this post, I’m sharing a quick review of the Microsoft Azure Machine Learning for Data Scientists course, along with key insights to help you prepare for the DP-100 certification exam. Just finished this second course in the Microsoft Azure Data Scientist Associate Professional Certificate? The DP-100 exam is your next big step, and this…